Published in AI & Technology

Why Yann LeCun Says LLMs Are Dead and What Business Leaders Should Do About It

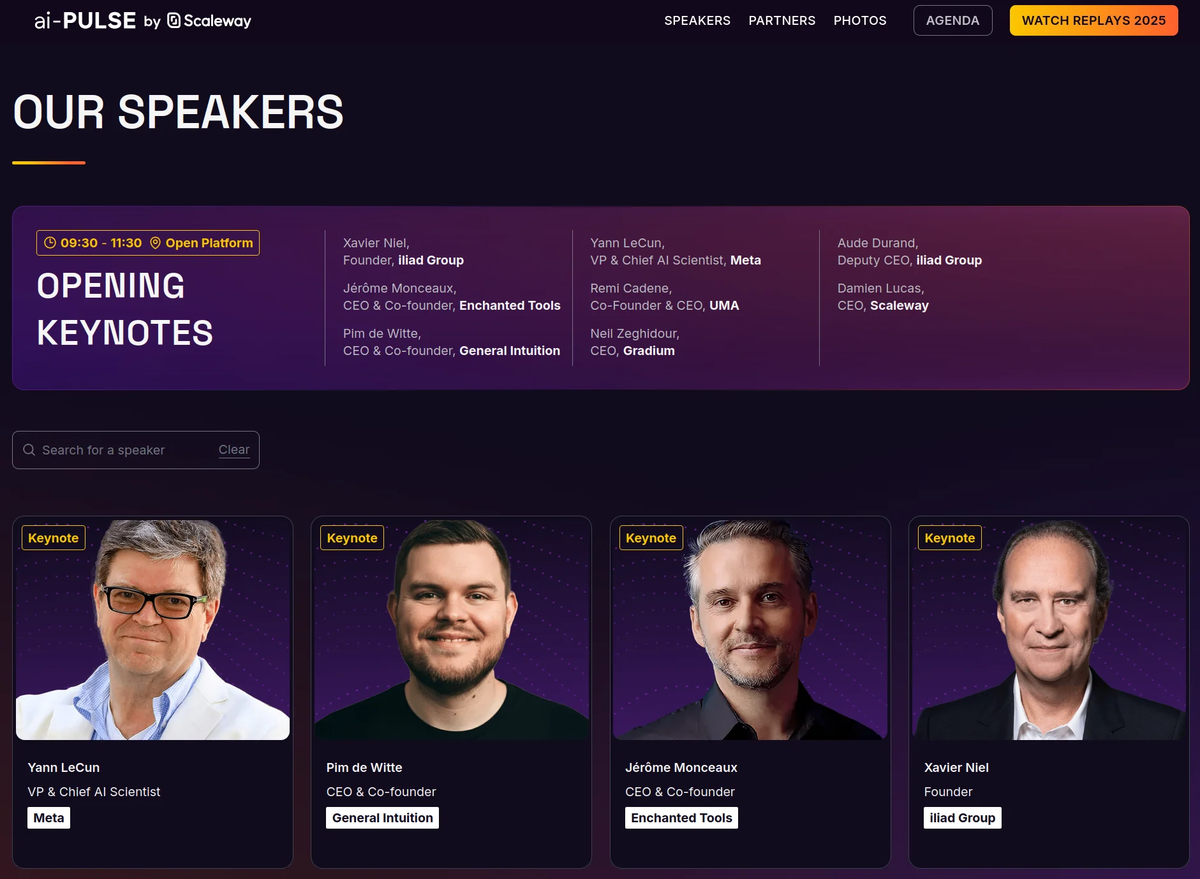

At aiPULSE2025 in Paris in December 2025, Yann LeCun delivered a clear message to the business community: the AI systems dominating today's headlines represent a dead end.

I didn't attend aiPULSE2025, but I listened to Yann's keynote during my morning run in Bukit Cina. This is a write-up of the gist of that keynote, along with some thoughts of my own.

Yann was on stage with Pim de Witte, the CEO and co-founder of General Intuition, and the session was moderated by Aude Durand, chairperson of Scaleway.

Yann LeCun is a French-American computer scientist recognized as one of the "Godfathers of AI." He received the 2018 Turing Award for pioneering deep learning—the technology powering today's AI revolution. After developing early image recognition systems at Bell Labs that automated bank check processing, he joined New York University as a professor in 2003.

In 2013, he became Chief AI Scientist at Meta, building the company's AI research lab for over a decade. In November 2025, he left Meta to launch a startup focused on next-generation AI architectures. His work enables modern business applications from computer vision to generative AI.

Large Language Models, despite their impressive capabilities, are "a little bit like snowballs," as co-speaker Pim de Witte explained. They roll downhill, accumulating mass, but have no perception of the world around them. They don't know when they're about to crash into something because their entire world is just themselves.

This isn't theoretical speculation. The limitations are already showing up.

The AI That's Not Intelligent Enough

Our best AI systems can pass the bar exam, compose poetry, and win international math olympiads. Yet we still don't have robots that can do what a five-year-old does, and we certainly don't have AI that can learn to drive in a few dozen hours like any teenager. Something essential is missing.

From Snowballs to Olaf: The World Model Difference

Pim de Witte's analogy from the movie Frozen clarifies the gap. LLMs are snowballs—autoregressive, accumulating tokens, with no perception. Real intelligence is more like Olaf, the sentient snowman who can see stones ahead and dodge them.

World models, Yann's proposed alternative, work differently. They don't just predict the next token or frame. They generate entire distributions of possible outcomes based on actions you might take. This requires something LLMs lack: interaction data.
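To make the idea of "a distribution of outcomes conditioned on an action" concrete, here is a minimal toy sketch. It is not how Yann's architectures or any real world model work; the three-lane slope, the slip probability, and every function name are hypothetical choices made only for illustration.

```python
from typing import Dict

# Hypothetical toy setup: a snowman slides down a slope with three lanes.
# The state is the current lane (0, 1, or 2); an action shifts the lane.
ACTIONS = {"left": -1, "stay": 0, "right": 1}
NUM_LANES = 3
SLIP_PROB = 0.2  # assumed chance that an action has no effect


def predict(lane: int, action: str) -> Dict[int, float]:
    """Return a probability distribution over next lanes for one action."""
    intended = min(max(lane + ACTIONS[action], 0), NUM_LANES - 1)
    dist: Dict[int, float] = {}
    # Most of the time the action works as intended...
    dist[intended] = dist.get(intended, 0.0) + (1.0 - SLIP_PROB)
    # ...but sometimes the snowman slips and stays where it was.
    dist[lane] = dist.get(lane, 0.0) + SLIP_PROB
    return dist


def crash_risk(lane: int, action: str, stone_lane: int) -> float:
    """Probability of ending up in the lane that contains the stone."""
    return predict(lane, action).get(stone_lane, 0.0)


def choose_action(lane: int, stone_lane: int) -> str:
    """Pick the action whose predicted outcomes are least likely to crash."""
    return min(ACTIONS, key=lambda a: crash_risk(lane, a, stone_lane))


if __name__ == "__main__":
    # A stone sits in the lane the snowman is currently in.
    print(choose_action(lane=1, stone_lane=1))
```

The point of the sketch is the shape of the interface: the model is asked "what could happen if I do this?" for each candidate action, and the answer drives the decision. A plain next-token predictor has no action input to vary.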

Think about when you dream. You can't really interact with what you're seeing; you're a bystander. That's passive video. A world model needs action and interaction data layered on top of video representations to build general intuition of environments. Yann says that video carries much more context and represents the world better than the text that current LLMs are trained on.

This is why "video game datasets are so valuable," says Yann. They come with ground-truth action labels already attached. Meta, for example, does about a billion video uploads per year with these action labels. This makes training "so compute efficient" because you can go straight into predicting actions without costly labeling steps.

The Data Economics That Favor World Models

Here's where the business case becomes compelling. All the publicly available text on the internet used to train typical LLMs amounts to about 10^14 bytes—30 trillion tokens. You can get that same amount from video very easily.

Even with video compressed to low frame rates, 10^14 bytes equals roughly 30 minutes of YouTube uploads. That's about the same amount of visual information a four-year-old has seen in their entire lifetime. Meta's V-JEPA 2 model was trained on 100 years of video using just a few thousand GPUs, nothing like the gigantic scales required for top-of-the-line LLMs.
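For readers who want to sanity-check these numbers, here is a rough back-of-envelope calculation. Only the 30 trillion tokens and the 10^14 bytes come from the keynote as summarized here; the bytes per token, the waking hours, the optic nerve bandwidth, and the YouTube upload rate are my own assumed round figures, so treat the result as an order-of-magnitude check and nothing more.

```python
# Text side: tokens quoted in the keynote, bytes per token is an assumption.
TEXT_TOKENS = 30e12            # 30 trillion tokens
BYTES_PER_TOKEN = 3            # assumed average for internet text
text_bytes = TEXT_TOKENS * BYTES_PER_TOKEN

# Vision side: all three figures below are assumed round numbers.
AWAKE_HOURS_BY_AGE_4 = 16_000       # assumed waking hours in four years
OPTIC_NERVE_BYTES_PER_SEC = 2e6     # assumed ~2 MB/s reaching the brain
visual_bytes = AWAKE_HOURS_BY_AGE_4 * 3600 * OPTIC_NERVE_BYTES_PER_SEC

# Video side: assumed upload rate, hours of video per minute of wall-clock time.
YOUTUBE_HOURS_UPLOADED_PER_MIN = 500
minutes_of_uploads = AWAKE_HOURS_BY_AGE_4 / YOUTUBE_HOURS_UPLOADED_PER_MIN

if __name__ == "__main__":
    print(f"text:   {text_bytes:.2e} bytes")
    print(f"vision: {visual_bytes:.2e} bytes")
    print(f"equivalent YouTube uploads: {minutes_of_uploads:.0f} minutes")
```

Under these assumptions both the text corpus and the four-year-old's visual input land close to 10^14 bytes, and the same number of hours of video is uploaded to YouTube in about half an hour.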

"You get the best of both worlds," Yann explained. "More data, smaller models, and in the end they are smarter. They have some level of common sense."

For businesses, this means world models require less compute while delivering capabilities for physical tasks that LLMs simply cannot match.

Europe's Unexpected Advantage

Both speakers emphasized that Europe is uniquely positioned for this shift. Pim noted it's been "much easier to find great people who are here, who are with us today, in Europe than it has been in the US." Many American companies remain "very LLM-pilled," focused on scaling existing architectures rather than exploring alternatives.

Pim attributed this European advantage to Yann's influence at FAIR, telling him: "A lot of the talent for world modeling is in Europe. I think a lot of that is due to the work that you did at FAIR and how you educated people." He contrasted the US "scale" mentality with Europe's suitability for fundamental research.

This creates a strategic opportunity. While US companies close their research and Chinese models carry geopolitical baggage, European labs can own the "truly open" middle ground—building models that are easily modifiable without political preconditioning.

The Open Source Imperative

Yann didn't mince words about the current state of AI openness. "OpenAI has stopped being open quite a long time ago. Anthropic never was. Google is kind of somewhere in between." Meanwhile, "China is going open source all the way," but this creates resistance because people don't want models "preconditioned to conform to the political view of the Chinese government."

Open research, he argued, is the best way to make fast progress and attract the best scientists. FAIR's openness forced even DeepMind to become more open. On the cusp of a new revolution, contributions from everyone are needed.

For businesses, this means the tools you adopt today should prioritize transparency and modifiability. Closed systems may offer short-term convenience but create long-term dependency risks.

In our work at Kafkai, we actively use non-US models, not as an alternative but as our default. None of our use cases, or our customers' use cases, are political in nature, and the open-source models from Chinese and French labs are really, really good and give a much better ROI. The point we keep in mind is where our data is going. If an open-source model is small enough, we run it in-house. If not, we make sure the data goes somewhere with trusted data protections in place.

Real-World Applications Already Emerging

Industrial applications are where world models show immediate promise. Yann gave the example of a turbojet engine with 1,000 sensors. Nobody has a complete phenomenological model of that engine. Think about steel mills or manufacturing plants with complex sensor arrays. How do you build a model of not just a robot, but its interaction with the environment?

World models can create complete digital twins of these systems, enabling predictive maintenance and process optimization that LLMs cannot touch. For manufacturers, this is no longer futuristic; it's a competitive necessity.

What Business Leaders Should Actually Do

The transition from LLMs to world models won't happen overnight, but the foundation is being laid now because people like Yann are working on alternatives that address the limits of text-based LLMs.

After listening to the keynote, here are five actionable steps I think small and medium businesses can take to prepare for the world model paradigm shift:

1. Start Collecting Action-Labeled Data Now. It's Your Future Moat

The speakers identified a critical shortage of ground-truth action data as the main bottleneck for training world models. If your business operates in manufacturing, logistics, robotics, or any sensor-rich environment, begin systematically logging not just video/sensor streams, but the actions that cause state changes:

- Tag machine operations with operator actions (e.g., "valve opened," "temperature increased")

- Record human demonstrations of tasks alongside video footage

- Use affordable devices (smart glasses, IoT sensors) to capture real-world interaction data

Opportunity: While LLM companies struggle with scarce text data, SMEs can build proprietary action datasets that become strategic assets for training specialized world models.
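As a starting point, the logging described above can be as simple as an append-only file of timestamped action events that shares a clock with your video or sensor recordings. The sketch below is one possible shape, not a standard: the `ActionEvent` fields, the JSON Lines file, and the IDs in the example are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict, field
from typing import Any, Dict, List


@dataclass
class ActionEvent:
    timestamp: float   # seconds on the same clock as the video/sensor stream
    actor: str         # hypothetical operator or controller ID
    action: str        # e.g. "valve_opened", "temperature_increased"
    target: str        # hypothetical machine or component ID
    params: Dict[str, Any] = field(default_factory=dict)


def log_action(path: str, event: ActionEvent) -> None:
    """Append one action event to the log as a single JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(event)) + "\n")


def actions_between(path: str, start: float, end: float) -> List[dict]:
    """Return events with start <= timestamp < end, e.g. for one video clip."""
    with open(path, encoding="utf-8") as f:
        events = [json.loads(line) for line in f if line.strip()]
    return [e for e in events if start <= e["timestamp"] < end]
```

For example, calling `log_action("actions.jsonl", ActionEvent(25.5, "operator_7", "temperature_increased", "furnace_1", {"delta_c": 15}))` records one labeled action, and `actions_between("actions.jsonl", 20.0, 30.0)` later retrieves the actions that fall inside a ten-second clip. The important design choice is the shared timestamp, because it is what lets you pair each action with the frames or sensor readings around it.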

2. Upskill Your Technical Team in Video Processing Fundamentals

World models operate in the "world of pixels," not tokens. The speakers emphasized that learning video encoders, FFmpeg, and video infrastructure will become as essential as understanding code is today. For resource-constrained SMEs:

- Send engineers to workshops on computer vision and video encoding

- Hire talent with FFmpeg/OpenCV experience (more abundant and affordable than LLM specialists)

- Experiment with existing video models (like Meta's V-JEPA) to understand embedding generation

Advantage: This skill set is currently undervalued compared to LLM expertise, giving early adopters access to top talent at lower costs.
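A first hands-on exercise for a team new to video could be sampling frames from existing footage at a low frame rate. The sketch below wraps the FFmpeg command line from Python; it assumes the `ffmpeg` binary is installed and on the PATH, and the helper names and file paths are hypothetical.

```python
import subprocess
from typing import List


def build_frame_extract_cmd(video_path: str, out_pattern: str, fps: int = 1) -> List[str]:
    """Build an ffmpeg command that samples `fps` frames per second of video.

    `out_pattern` is an image sequence pattern such as "frames/frame_%05d.png".
    """
    return ["ffmpeg", "-i", video_path, "-vf", f"fps={fps}", out_pattern]


def extract_frames(video_path: str, out_pattern: str, fps: int = 1) -> None:
    """Run ffmpeg; raises CalledProcessError if ffmpeg exits with an error."""
    # The output directory in out_pattern must already exist.
    subprocess.run(build_frame_extract_cmd(video_path, out_pattern, fps), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so this works without ffmpeg.
    print(" ".join(build_frame_extract_cmd("line_camera.mp4", "frames/frame_%05d.png")))
```

Keeping the command construction separate from the call that runs it makes the command easy to inspect and to test on a machine without FFmpeg installed.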

If you're already working with video in some form, I think this is something you should seriously think about and plan for.

3. Target Vertical Applications Where LLMs Fail

The keynote highlighted that our best AI can pass the bar exam but can't match a five-year-old's physical reasoning. This creates greenfield opportunities for SMEs in:

- Manufacturing: Predictive maintenance for complex machinery (e.g., jet engines with 1,000+ sensors)

- Industrial automation: Process optimization in steel mills, chemical plants, or assembly lines

- Robotics: Systems that learn from demonstration in constrained environments

Strategy: Focus on narrow, high-value physical domains where you have domain expertise and data access—areas too specialized for Big Tech's horizontal LLM approach.

Kafkai does something similar, although we're not in a physical domain.

We're focusing on two key areas where LLMs fall short: 1) competitive intelligence, which requires accurate contextual data for comparison, and 2) the integration process needed to transform raw output into a final product. I won't delve into the details of these areas in this post to avoid unnecessary complexity, but feel free to request more information.

4. Leverage the Compute Efficiency Advantage

World models require "a few thousand GPUs, nothing like the gigantic scales required for top-of-the-line LLMs." This democratization means:

- Training costs are orders of magnitude lower than LLMs

- You can fine-tune existing world models on your proprietary data without massive infrastructure

- Cloud GPU rental becomes economically viable for extended training runs

Action: Start pilot projects now using available world model architectures (JEPA variants) on commodity GPU clusters. The compute economics favor experimentation.

5. Join Europe's Open Research Ecosystem Before It Closes

This last one is something of a sales pitch by Yann and the whole panel, but the speakers did warn us that "most big American players are clamming up" (becoming closed), while Chinese open models carry geopolitical risks. Europe is positioned as the "truly open" hub for world model research.

So I think if you're an SME thinking of global expansion, you should:

- Partner with European research institutions (like Pim's team)

- Contribute to open datasets and publish foundational work to attract global talent

- Participate in collaborative projects that share upstream research while keeping product implementations proprietary

Strategic Value: Open collaboration accelerates progress and attracts top scientists—creating a talent pipeline and innovation network that closed competitors cannot access.

Key Shift: The move from LLMs to world models marks a significant change, comparable to the shift from symbolic AI to deep learning. I think nimble small and medium-sized businesses that proactively gather action data, develop video processing skills, and focus on real-world applications can secure leading roles before the market matures.

The Bottom Line

Yann's message was blunt: "I've always thought that was BS, if you pardon my French," referring to claims that scaling current technology will achieve human-level intelligence. The path forward demands a fundamental architectural shift.

For businesses, this isn't about abandoning LLMs immediately. That is in fact impossible, given how deeply we already rely on them. It's about recognizing their limitations and starting to prepare for what follows. The companies that succeed will combine AI efficiency with human expertise, cultivate genuine communities, and create interactive data assets that world models can learn from.

The era of rapid progress is ending. The question is whether your business will be ready when Olaf arrives.
